Baseline used: Wav2CLIP

Audio credits: Adobe Audition Sound Effects

Video credits: Pexels

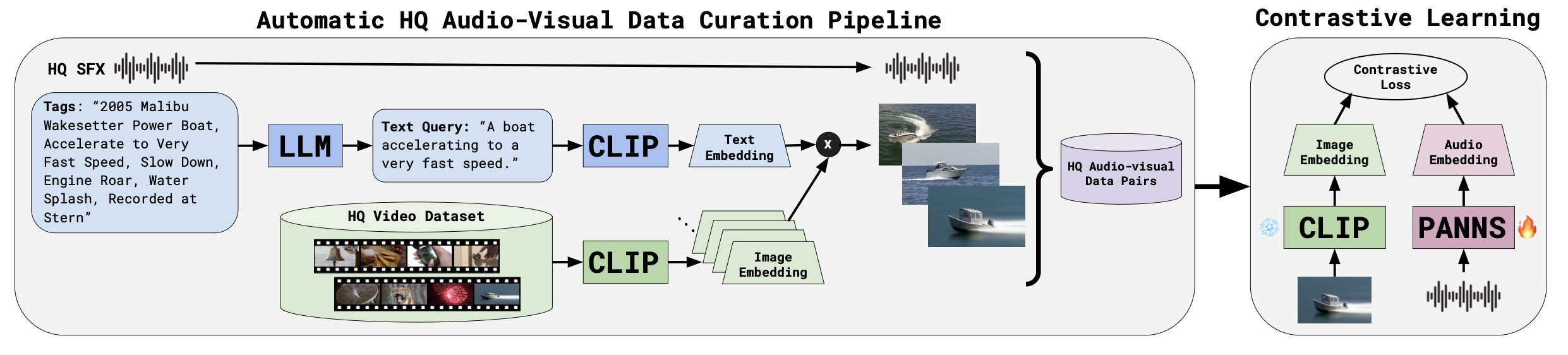

Finding the right sound effects (SFX) to match moments in a video is a difficult and time-consuming task, and relies heavily on the quality and completeness of text metadata. Retrieving high-quality (HQ) SFX using a video frame directly as the query is an attractive alternative, removing the reliance on text metadata and providing a low barrier to entry for non-experts. Due to the lack of HQ audio-visual training data, previous work on audio-visual retrieval relies on YouTube ("in-the-wild") videos of varied quality for training, where the audio is often noisy and the video of amateur quality. As such, it is unclear whether these systems would generalize to the task of matching HQ audio to production-quality video. To address this, we propose a multimodal framework for recommending HQ SFX given a video frame by (1) leveraging large language models and foundational vision-language models to bridge HQ audio and video to create audio-visual pairs, resulting in a highly scalable automatic audio-visual data curation pipeline; and (2) using pre-trained audio and visual encoders to train a contrastive learning-based retrieval system. We show that our system, trained using our automatic data curation pipeline, significantly outperforms baselines trained on in-the-wild data on the task of HQ SFX retrieval for video. Furthermore, while the baselines fail to generalize to this task, our system generalizes well from clean to in-the-wild data, outperforming the baselines on a dataset of YouTube videos despite being trained only on the HQ audio-visual pairs. A user study confirms that people prefer SFX retrieved by our system over the baseline 67% of the time for both HQ and in-the-wild data. Finally, we present ablations to determine the impact of model and data pipeline design choices on downstream retrieval performance. Please visit our companion website to listen to and view our SFX retrieval results.

Our proposed system, shown above, contains two core components:

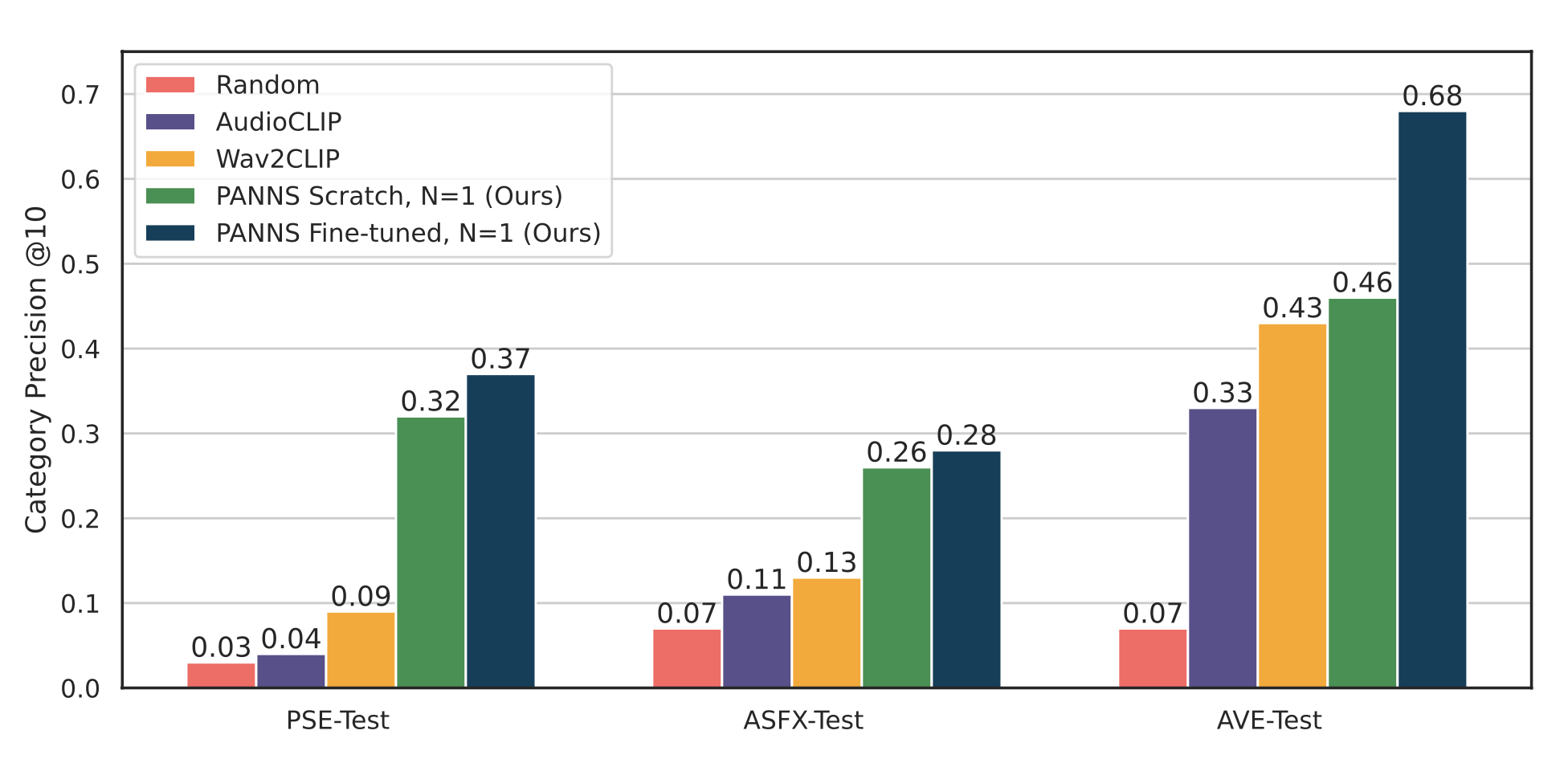
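To make the contrastive training idea concrete, here is a minimal sketch of a symmetric InfoNCE-style loss over a batch of paired audio and visual embeddings. The text above states only that the retrieval system is contrastive learning-based; the specific loss form, the function names, and the `temperature` value of 0.07 are assumptions made for illustration, not details taken from the paper.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy_rows(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the row max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(audio_emb, visual_emb, temperature=0.07):
    """Symmetric InfoNCE-style loss (assumed form, for illustration only).

    audio_emb[i] and visual_emb[i] are treated as a matched pair; every
    other audio/visual combination in the batch acts as a negative.
    """
    a = [l2_normalize(x) for x in audio_emb]
    v = [l2_normalize(x) for x in visual_emb]
    # logits[i][j]: scaled cosine similarity between visual i and audio j.
    logits = [
        [sum(p * q for p, q in zip(vi, aj)) / temperature for aj in a]
        for vi in v
    ]
    # Transposing gives the audio-to-visual direction.
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(logits_t))
```

In this sketch the loss is small when each visual embedding is closest to its own paired audio embedding and large when the pairs are misaligned, which is the property a retrieval system of this kind relies on.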

We evaluate our system against two strong pre-trained baselines capable of performing audio retrieval given a visual query: Wav2CLIP and AudioCLIP. We evaluate our proposed method and the baselines on three test sets: (1) PSE-Test: PSE Sound Effects paired with Adobe Stock videos, generated using our proposed data curation pipeline; (2) ASFX-Test: Adobe Audition Sound Effects paired with Adobe Stock videos, generated using our proposed data curation pipeline; and (3) AVE-Test: a dataset containing real audio-visual pairs, i.e. "in-the-wild" videos. The results are shown below:

Our best model significantly outperforms the baselines across all three test sets. The results indicate two important takeaways. First, our model is superior at matching HQ SFX to production-quality video, our target application, as indicated by the results on PSE-Test and ASFX-Test. Second, and more surprisingly, our model successfully generalizes to noisy in-the-wild data, represented by AVE-Test. This is particularly notable for our model trained from scratch, as it was trained with only HQ auto-curated audio-visual pairs. Conversely, the baselines, which were trained on large in-the-wild video collections, fail to generalize to the clean audio-visual data. This validates our proposed data curation pipeline as useful for training models that generalize to both clean HQ data and noisy in-the-wild data.
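As a rough illustration of how retrieval from a visual query can be scored, the sketch below ranks candidate audio embeddings by cosine similarity to a query embedding and computes recall@k. Recall@k is a common retrieval metric and is used here as an assumption; this page does not state which metrics were reported, and the function names and toy embeddings are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_audio(query_emb, audio_embs):
    """Return audio indices sorted from most to least similar to the query."""
    scores = [cosine(query_emb, a) for a in audio_embs]
    return sorted(range(len(audio_embs)), key=lambda i: scores[i], reverse=True)


def recall_at_k(query_embs, audio_embs, relevant, k):
    """Fraction of queries with at least one relevant audio clip in the top k.

    relevant[i] is the set of audio indices considered correct for query i.
    """
    hits = 0
    for query, rel in zip(query_embs, relevant):
        top_k = rank_audio(query, audio_embs)[:k]
        hits += any(i in rel for i in top_k)
    return hits / len(query_embs)


# Toy example with hypothetical 2-D embeddings.
audio_embs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query_embs = [[1.0, 0.05], [0.1, 1.0]]
relevant = [{2}, {1}]
print(recall_at_k(query_embs, audio_embs, relevant, k=1))
print(recall_at_k(query_embs, audio_embs, relevant, k=2))
```

In a real evaluation the query embeddings would come from the visual encoder and the candidates from the audio encoder, with the ground-truth pairs of each test set defining `relevant`.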

User Study: We also perform a user study to validate our results qualitatively. We surveyed 28 users who each evaluated 30 comparisons of our retrieved SFX given a visual query versus the baseline, yielding 840 total unique comparisons. We found that participants preferred the SFX recommended by our model over the baseline 68.1% and 66.4% of the time for data from PSE-Test and AVE-Test, respectively.

@inproceedings{wilkins2023sfx,
  title={Bridging High-Quality Audio and Video via Language for Sound Effects Retrieval from Visual Queries},
  author={Wilkins, Julia and Salamon, Justin and Fuentes, Magdalena and Bello, Juan Pablo and Nieto, Oriol},
  booktitle={2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA)},
  year={2023},
  organization={IEEE}
}